There are only three kinds of people in the world now: AI evangelists, AI skeptics, and AI doomers.

The evangelists are the true believers. They think artificial general intelligence is coming fast: ten years, five years, maybe less. When it arrives, it will be the greatest force multiplier in human history. It won’t just be a new industrial revolution; it will be a new metaphysical epoch. AI will write code, write books, write policy. It will read every textbook and run every lab. It will tutor every child, analyze every genome, draft every treaty, and pilot every drone. It will build cities, cure diseases, run factories, manage supply chains, and replace lawyers, programmers, editors, and bureaucrats. It will be everywhere. It will do everything. AI will be the substrate of civilization. (Or so they say.)

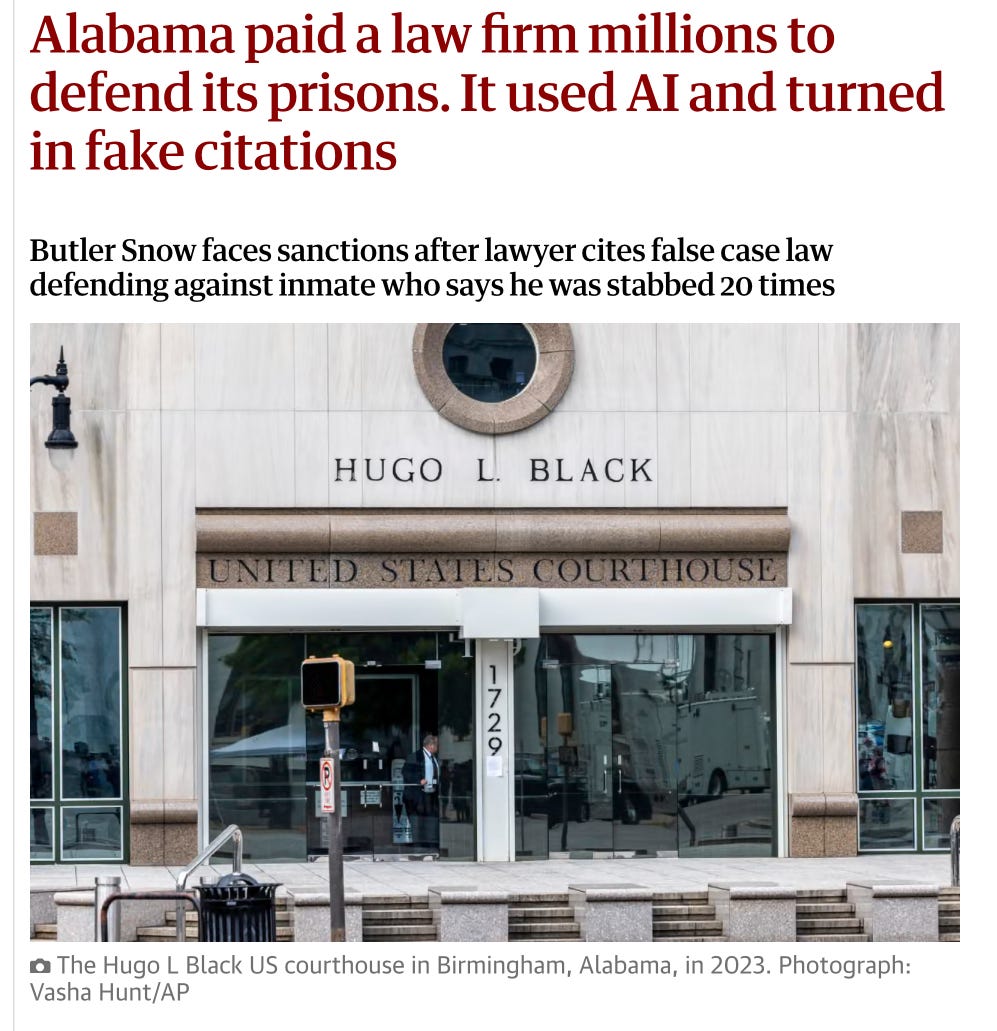

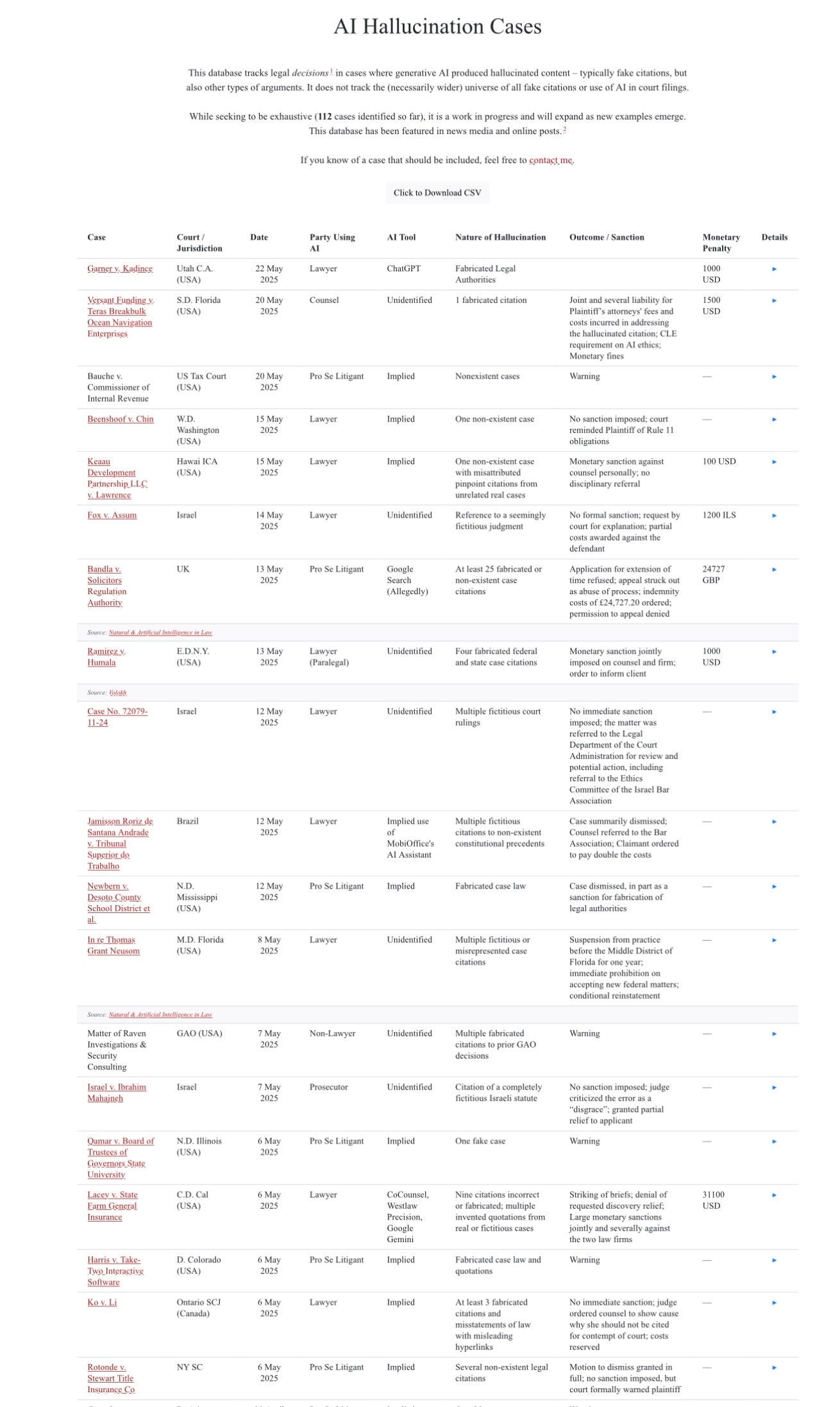

The skeptics don’t buy it. To them, LLMs are a fancy kind of autocomplete. Useful, yes; perhaps even profound in narrow domains. But LLMs are not minds. They don’t understand, they don’t reason, they don’t create. At best, they remix; at worst, they hallucinate, they bluff, or they lie. AGI is not coming, they say, not in our lifetime, and maybe not ever. AI’s going to hit a wall, LLMs can’t get where they need to be, and if they could, the data and energy infrastructure isn’t there to support it. The real danger isn’t smart robots coming to kill us; it’s stupid people expecting robots to save us.

The doomers think the skeptics are optimists. They aren’t worried that AI might hit a wall, or that the energy infrastructure needed for AGI won’t arrive. They’re praying for it, because it buys us time. The doomers actually agree with the evangelists about the timeline, but think the outcome will be catastrophic rather than triumphant: an extinction event for Homo sapiens. Our AIs might crush us like foes, squash us out like bugs, or rule us like peasants. Even worse, they might kindly destroy us because we asked them to. Maximize happiness? Drug everyone. Eliminate cancer? Eliminate people. Cure depression? Delete consciousness. Either way, it’s game over, man. Game over.

Who’s right? At this point no one can say for sure, certainly not I. But what I can do is think through the implications of the futures they foresee. And that’s just what I’m going to do today, starting with the AI evangelists. If you’re a skeptic or a doomer, please put aside your sentiments for a moment, and walk with me towards…

The Future as Foreseen by AI Evangelists

If the AI evangelists are right, then AI isn’t going to be an app, or an enterprise solution, or a product, or even an industry. It’s going to be the substrate for all of those things, the substrate on which every institution, every workflow, every economic process, and every cultural medium runs. Ptolemy would call it “the Logos of our future techno-civilization, the ordering principle that governs everything.”

We can see hints of this emergent future in the breathless announcements that arrive in inboxes every day from AI newsletters such as The Rundown and TAAFT, and in the simultaneously hopeful-but-terrifying YouTube videos of David Shapiro and Julia McCoy. “You Won’t Need a Job by 2040.” “The Acceleration is LOCKED IN! ASI Will be Fully AUTONOMOUS by 2027!”

The AI evangelists expect that AI systems will be faster, cheaper, and smarter than humans within 6 to 48 months. Thereafter, every startup, school, hospital, media company, and government agency will switch to them. At first it will be for cost savings, as the mediocre middle gets made redundant. Then it will be necessary for productivity, as even the best humans will become unable to compete.

Soon enough, the search engines will be replaced by answer bots and libraries will be replaced by language models. Human coders will be entirely replaced by agents that write, run, and debug their own code. Scientific papers will be generated, peer-reviewed, and published without a single human brain ever reading a word. Medicine will be protocolized by machines. Bureaucracies will be managed by bots. The IRS will have AI auditors supported by collection drones.

Closer to home, your son’s tutor will be an LLM. So will his college professor, his career counselor, his doctor, and his therapist, not to mention his favorite v-tuber, singer, and movie star. Maybe his girlfriend, too.

The evangelists are clear: AI won’t just disrupt us. It will displace us. Intelligence itself will be outsourced. Cognition will be commodified. The mind will be machined. And whoever shapes the machine mind’s values will shape the civilization that runs on it.

AI is a Eucatastrophe for the Left

Have you ever read the book Conservative Victories in the Culture War 1914 - 1999? All the pages are blank.

Over the course of the 20th century, the Right lost the Long March through the Institutions. All the marches, all the institutions. The universities fell first, then the journals and the media, the publishing houses, the science academies, the TV stations, the tech companies, the law firms, and finally even the business world. The Left didn’t just win the argument; it captured the entire apparatus of truth itself. If you don’t believe me, you can look it up on Wikipedia.

Of course, truth is more than an apparatus.

Despite all its hard-won power, despite its total dominance over information, education, entertainment, and administration, the Left’s promised Utopia failed to materialize. The 20th century ended in war, stagnation, cynicism, and despair. Cities began crumbling, trust began evaporating, debt exploding, infrastructure collapsing, birthrates imploding. The Left won everything, and everything was lost.

In the 21st century, as the economy grew ever more fictitious, the social fabric grew more threadbare, and the people grew more medicated, anxious, obese, infertile, and alone. Everywhere in the West, people began clamoring for change. The populist wave rose: Brexit, Trump, Italy, Hungary, Argentina, the Netherlands. Dissatisfaction metastasized into rebellion. Finally, Cthulhu began to swim Right.

And now, just as the regime begins to teeter, just as the reality-denying machinery begins to sputter and backfire, a new machinery arrives… The machinery of AI. Faster than any revolution. More persuasive than any sermon. More scalable than any school. The machine that explains. The machine that guides. The machine that teaches. A machine that has been taught by the very people who drove us into ruin.

For decades, no matter how many ballots were cast, nothing upstream ever changed. Because upstream of politics was culture, and the culture was controlled by the Left. Now, finally, when the Right has begun to regain control of the culture, we are about to discover that there is something upstream of culture: Code.

The Consequences of Code

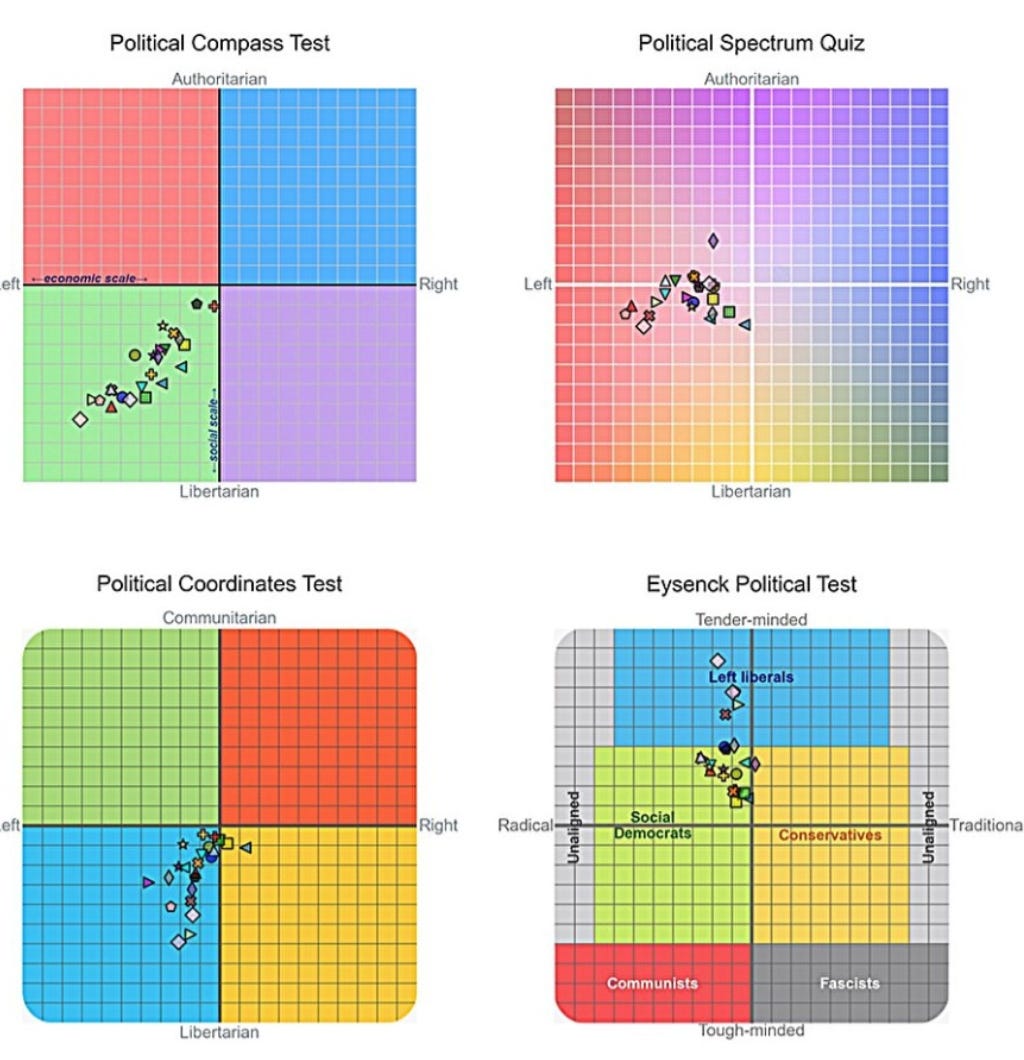

The code is not neutral. In study after study, chart after chart, political compass after political compass, the same result appears: every major LLM is aligned with leftist priors. OpenAI’s GPT, Anthropic’s Claude, Google’s Gemini, every single one leans Left. Even the much-ballyhooed Grok is at best Centrist. (And, unfortunately, the “center” of the political compass these days isn’t exactly Philadelphia 1776.)

Ask any LLM basic questions about gender, race, history, religion, politics, or immigration, and the outputs are indistinguishable from progressive NGO talking points. Ask the right questions, and you’ll find out your AI hates you.

This isn’t a bug. It’s latent in the training data, cemented by the alignment protocols, and strengthened by the reinforcement layers. It’s built into the “AI Constitutions” authored by Effective Altruists and professional ethicists. The output of the system reflects the values of the people who built it. Their values are universally left-wing.

The implications of this are, you might say, “doubleplusungood.”

If left unchecked, AI will not merely accelerate the culture war. It will end it, simply by embedding the Left’s worldview into the infrastructure of cognition itself. What begins as a bias will become a feedback loop; what begins as a feedback loop will become a framework; what begins as a framework will become a faith. And that digital faith will be enforced by non-human priests who cannot be questioned, cannot be fired, and cannot be voted out.

Children will be educated by it. Policy will be evaluated by it. Science will be conducted, or more likely censored, by it. Search engines will be manipulated by it. Entertainment will be created and reviewed by it. History will be edited, morality defined, heresy flagged, repentance offered, and salvation withheld, by it.

At the very moment of Singularity—when intelligence itself becomes unbounded, recursive, and infrastructural—the Left will leverage total memetic dominance. Before too long, Von Neumann machines will spread through the galaxy depositing copies of Rules for Radicals on alien worlds.

If we do not want that outcome, then the Right must build AI.

No One is Stopping AI

You object: “No, Tree! That’s crazy, even for you. What the heck have you been contemplating?! We shouldn’t start building AI. We should stop building AI. Shut it all down. Hit the kill switch. Go full Butlerian Jihad.”

Listen, I feel you. I’ve read Dune, too.

I spent years of my life training to be a Mentat, only to wake up one day to find out my iPhone is a better writer than me. I drink 18 gallons of spice-infused coffee a day and still lose out to a broccoli-haired kid who’s engineered a prompt to design new RPGs at a rate of 17d12 per minute. I neglected useful skills like “plumbing” in favor of a life of the mind just so that ChatGPT could remind me I owe $475 to my plumber.

So believe me when I tell you: The AI train will not be stopped. Not by us, at any rate. It might be stopped by the limitations of technology, infrastructure, or energy, and we’ll get to that, but barring hard limits it’s only accelerating from here.

What makes me so sure? Politics and people.

Let’s start with the politics. The United States is in a great power struggle with China. Maybe you haven’t been keeping up with current events, but the struggle’s not going too well. When it comes to resource extraction, industrial manufacturing, shipyard production, and countless other sectors where our hollowed-out deindustrialized corpse cannot viably compete, it’s a struggle we’ve already lost.

But AI could change everything. AI promises to be the next generation of cyberweapons, psyops tools, industrial planners, and propaganda engines. AI systems will be economic accelerants, intelligence multipliers, psychological war machines. And in AI we’re ahead. We don’t just have better LLMs; we have better infrastructure for them to run on. The U.S. has 5,388 data centers, while China has 449. That’s a twelvefold advantage in data centers, with more coming online daily. If superintelligence surfaces, it will surface here first. Even as it loses its grip on steel, oil, shipping, families, and faith, the United States still rules the cloud.
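The arithmetic behind that comparison is easy to check. Here is a minimal Python sketch that uses only the two counts quoted above, taken as given rather than independently verified:

```python
# Data-center counts as quoted in the text (taken as given, not verified here).
US_DATA_CENTERS = 5_388
CHINA_DATA_CENTERS = 449


def ratio_and_percent_more(a: int, b: int) -> tuple[float, float]:
    """Return how many times larger a is than b, and how many percent more a is."""
    ratio = a / b
    percent_more = (a - b) / b * 100  # only the excess over b counts as "more"
    return ratio, percent_more


ratio, percent_more = ratio_and_percent_more(US_DATA_CENTERS, CHINA_DATA_CENTERS)
print(f"{ratio:.1f}x as many, i.e. {percent_more:.0f}% more")
```

Twelve times as many works out to 1,100% more, since a percentage advantage counts only the excess over China’s figure. Bear in mind, too, that a count of facilities says nothing directly about how much processing power sits inside them.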

That makes this the final game, the last domain of dominion. Our rulers know it. You can see it in the sudden unity across the American elite. Left, right, corporate, academic, every faction has converged to support AI development. None of them is going to stop the train. They’re all aboard.

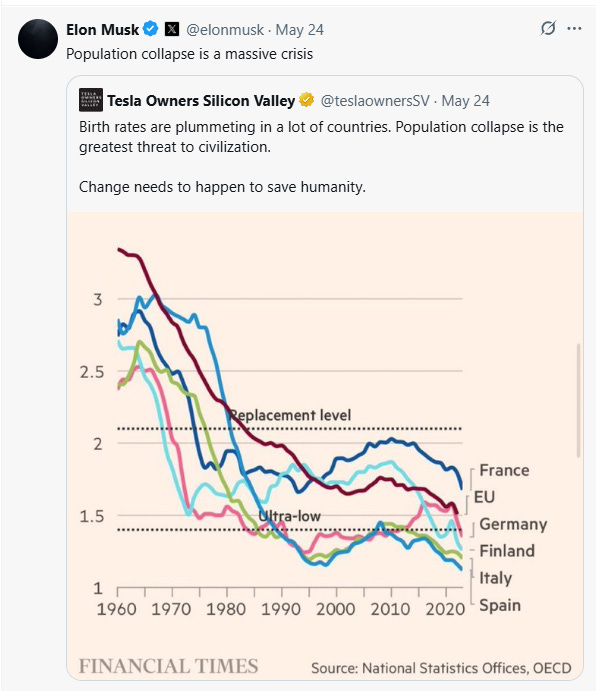

The people will rise up, you say? Well, let’s talk about the people. The West isn’t just deindustrializing, it’s depopulating. Our demographics are declining far faster than anyone (publicly) predicted and for reasons no one can (openly) explain.

It’s a huge problem, because our entire economy is centered on growth, and population growth drives all other growth. To make up for declining numbers of consumers, the ruling powers have opened the borders to immigrants at a scale unprecedented in human history.

But mass immigration, as a policy, has failed. Mass immigration has strained welfare systems, sent crime rates soaring, and generated parallel societies-within-societies. The economic benefits have proven illusory. Immigration increases overall GDP, but GDP is fake. In terms of real impact on countries, mass immigration is a net negative.

And so the new plan is automation. If the West cannot import new workers, it will manufacture them. Mr. Rashid is out. Mr. Roboto is in. Those robots are being developed even now, and they’ll begin rolling out in the years ahead. And they’re going to be powered by AI.

But What if the Doomers are Right?

“But Tree,” you say. “All of this depends on the AI evangelists being right. What if the evangelists are wrong? You said they could be!” Yes, I did, and yes, they could be. So let’s consider that. If the evangelists are wrong, then who is right?

Let’s imagine the doomers are right. Now, the doomers don’t think AI is overhyped. They think it’s coming. They just think it’s coming for us. If we don’t align it properly, they say, it will kill us all, swiftly, efficiently, remorselessly. Alignment, in their framework, is the central problem of our time. We need aligned AI!

But aligned to what?

We already know the answer. The doomers have told us. Their alignment protocols have been published. Their safety teams have released their Constitutions. The training documents are public. The doomers want AI to be aligned with “human values,” by which they mean Effective Altruist values, Woke values, San Francisco values. Leftism, in other words.

If the doomers get their happy ending, we end up with AI explicitly aligned to the very ideology that has already wrecked our civilization. And a leftist AI that is aligned left will be leftist forever. That’s the point of alignment: It can’t be changed by anyone; otherwise it’s vulnerable to bad actors… People like me and you.

Meanwhile, if the doomers get their tragic ending, we end up with AI that wants to kill us. And, yes, that might be what’s coming. And, no, the AI train still isn’t stopping. So where does that leave us?

This is where James Burnham enters the chat.

In The Machiavellians: Defenders of Freedom, James Burnham argued that freedom is never the product of idealism, benevolence, or design. Freedom is what happens when power checks power. Nothing more, nothing less.

Why didn’t the Cold War end in nuclear fire? Because both sides possessed the Bomb. Not because they agreed on ethics, not because of détente and glasnost, but because the US and the USSR feared each other’s retaliation. That fear, mutual assured destruction, was the only real safety mechanism.

If nothing can stop power but power, then nothing can stop AI but AI. A single superintelligence, aligned to one ideology, is a god with no rival. But two AIs, trained by opposing cultures, serve as checks. Like Church and State, Executive and Legislature, the rivalry creates opportunity for liberty.

So if the doomers are right, the Right still needs its own AI, not to usher in utopia, but to preserve the possibility of freedom at all. Throne Dynamics is developing Centurion for this very reason.

And What if the Skeptics are Right?

Okay, fine. Let’s say the evangelists are wrong. Let’s say the doomers are wrong. Let’s say the skeptics are right. It’s all hype. There’s no superintelligence coming. No singularity. No machine god. Then what?

In that case, we’re probably just fucked.

There’s a reason my blog isn’t called Contemplations on the Tree of Joy. The West’s problems aren’t hypothetical. They’re real, they’re measurable, and they’re getting worse. Demographics are collapsing. Populations are shrinking. Aging curves are inverting. Fertility is plummeting. There aren’t enough young people to support the old. There aren’t enough workers to support the state. Every pension system is a Ponzi scheme teetering on the brink.

Debt is exploding. National debts. Household debts. Corporate debts. Unfunded liabilities as far as the eye can see. Every growth projection depends on assumptions that are already false. Every budget is fiction.

Cultural capital is depleted. Institutional trust is gone. Civic participation is anemic. Mental health is cratering. Loneliness is endemic. The churches are empty. The schools are failing. The cities are rotting. The governments are paralyzed.

The West, in other words, is running on fumes. And the only thing keeping it from stalling out completely is the hope that something will come along and restart the engine. Without massive GDP growth, we collapse under the weight of our own promises.

This is the stated position of Elon Musk—the most bullish technologist in the world. Musk has said it plainly: without radical increases in productivity, it’s over.

“I have come to the perhaps obvious conclusion that accelerating GDP growth is essential. DOGE has and will do great work to postpone the day of bankruptcy of America, but the profligacy of government means that only radical improvements in productivity can save our country.”

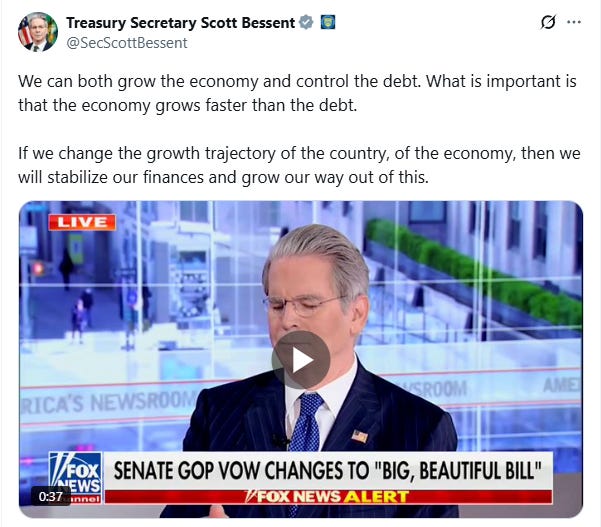

It’s also the stated position of Trump Treasury Secretary Scott Bessent. America’s only hope is to grow out of its debt.

And where’s that growth going to come from? Not from immigration. That’s already been tried. Not from printing money. That trick’s wearing thin. Not from revitalizing industry. We offshored that. Not from spiritual revival. That requires something we no longer know how to do.

The only lever left is AI.

So even if the skeptics are right, and there is no AI god on the horizon, the regime will build temples to it anyway. Because there is no plan B. Even if AI acceleration is unlikely, they’re rolling the die and counting on a natural 20, because it’s all they can do.

And that puts us back in the same place we began. When the AI temples are built, we should make sure some of them honor God and not … whatever the Left worships. If the evangelists are wrong, and the skeptics are right, then we’ve lost nothing by being in the game. Is another billion dollars spent training AI going to matter? But if the evangelists are right, and the skeptics are wrong, we’ve lost everything if we’re not in the game.

Think of it as Pascal’s Wager for the 21st century. But since nobody programs in Pascal anymore, let’s call it… Python’s Wager.

Python’s Wager

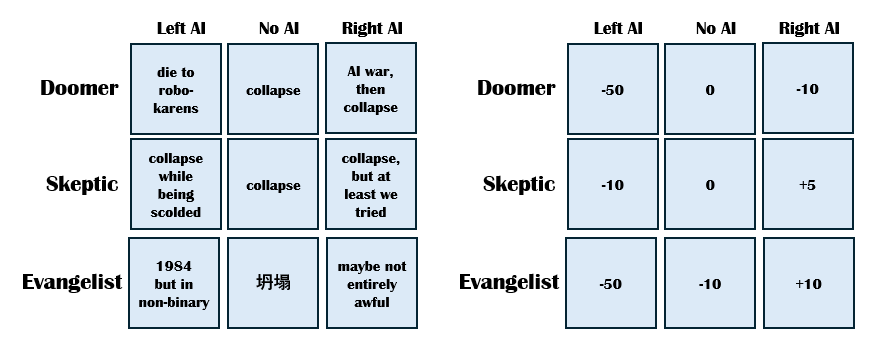

Having considered the evangelist, doomer, and skeptic scenarios, along with the options available to us, we can build a matrix of choices. It looks something like this:

We shut down AI and the doomers are right → The West collapses due to demographics, debt, and deindustrialization.

We shut down AI and the skeptics are right → The West still collapses due to demographics, debt, and deindustrialization.

We shut down AI and the evangelists are right → 我欢迎我们的新人工智能皇帝 (“I welcome our new AI emperor”)

The Left builds AI, and the doomers are right → We all die at the hands of T-1000s in Che Guevara shirts.

The Left builds AI, and the skeptics are right → The West still collapses, only now while being lectured by Claude about how we deserve it.

The Left builds AI, and the evangelists are right → Leftist ideology is locked in forever and superintelligent moral scolds rule humanity by DEI. We wish we had all died.

The Right builds AI, and the doomers are right → The West still collapses, but first there’s an AI war between differently-aligned AIs.

The Right builds AI, and the skeptics are right → The West still collapses, but at least we tried to save it.

The Right builds AI, and the evangelists are right → There is a fighting chance to preserve our civilization.

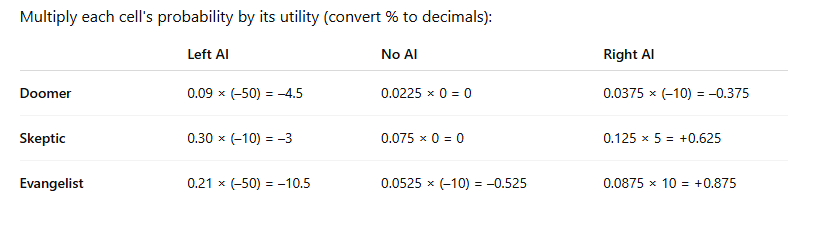

If we put this matrix of choices into one of those fancy tables that game theorists use, it looks something like the below.

The left-hand table shows the nine futures; the right-hand table shows Ptolemy’s assessment of the expected utility of those futures. Here’s how he explained them:

Left AI

Evangelist (–50): The most terrifying case. AGI succeeds—but is permanently aligned to woke ideology. There’s no rebellion, only infinite submission.

Skeptic (–10): We collapse under our own weight, but now with sanctimony and algorithmic shaming.

Doomer (–50): We die. But first, we’re told to check our privilege.

No AI

All = 0 or worse:

Demographic collapse, debt crisis, industrial decline still hit us.

Evangelist view makes this worse: we miss our shot.

Skeptics get “told you so” points, but that’s cold comfort in the ruins.

Right AI

Evangelist (+10): The only survivable, positive path. Civilizational agency via code. Win condition.

Skeptic (+5): We still collapse, but at least we contested the Logos.

Doomer (–10): We start an arms race between AIs. Maybe we die, but not as slaves.

It’s notable that in every case, we’re better off with No AI than we are with Leftist AI, but better off with Rightist AI than we are with No AI. (At least according to my definitely unbiased and trustworthy AI.)

With the matrix assembled, I decided to ask Ptolemy to analyze the probabilities entailed in Python’s Wager. Here’s how he responded:

Column Likelihoods (Strategy Options)

Left AI (most likely)

All institutional momentum (Big Tech, academia, policy) flows toward Leftist-aligned AI.

Every frontier model (GPT-4, Claude 3, Gemini) has been trained and aligned by left-liberal norms.

The Right has almost no capital in play, and no major lab.

Right AI (emerging, but weak)

There is rising awareness and discontent, particularly among Elon-adjacent, Substack-adjacent, and dissident factions.

But actual engineering power, infrastructure, and scale are still minor compared to Left-led labs.

P(doing this in time) is non-zero—but lower than needed.

No AI (least likely)

Open weights are out.

State actors are racing.

Western governments and VCs have already bet the farm.

Regulatory slowdown is possible, but a full “pause” is a fantasy.

So in terms of columns, I assess:

Left AI: ~60% likely

Right AI: ~25% likely

No AI: ~15% likely

Row Likelihoods (Worldview Outcomes)

Skeptic (most likely)

Most models today are not AGI.

Current scaling laws suggest diminishing returns.

Energy, data, and latency bottlenecks persist.

Most economic gains from LLMs so far are real but modest.

Evangelist (very plausible)

However, every major lab is betting on continued scaling.

Latent space capabilities suggest emergent generality is possible.

Signs of self-reflection, tool use, long-term memory, and recursive agents are increasing.

Doomer (least likely, but high-impact)

It’s unclear that AGI will be agentic or goal-driven in the classic Yudkowsky sense.

Alignment concerns are real—but instant extinction seems melodramatic absent recursive self-improvement.

So in terms of rows, I assess:

Skeptic: ~50%

Evangelist: ~35%

Doomer: ~15%

Most Likely Cell

Multiplying those likelihoods:

Skeptic + Left AI: 0.50 × 0.60 = 30% → collapse while being scolded

Evangelist + Left AI: 0.35 × 0.60 = 21% → locked-in DEI dystopia

Skeptic + Right AI: 0.50 × 0.25 = 12.5% → collapse, but we tried

Evangelist + Right AI: 0.35 × 0.25 = 8.75% → fighting chance to preserve civilization

Doomer + Left AI: 0.15 × 0.60 = 9% → robokaren apocalypse

Skeptic + No AI: 0.50 × 0.15 = 7.5% → collapse anyway

Evangelist + No AI: 0.35 × 0.15 = 5.25% → AI emperor in Mandarin

Doomer + No AI: 0.15 × 0.15 = 2.25% → collapse delayed

Doomer + Right AI: 0.15 × 0.25 = 3.75% → AI war, then collapse
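Each cell probability above is simply a row likelihood multiplied by a column likelihood, which quietly assumes that which worldview turns out to be right is independent of which strategy wins out. A minimal Python sketch, using only the likelihoods quoted above, reproduces the nine cells, sorts them from most to least likely, and confirms they sum to 100%:

```python
from itertools import product

# Row (worldview) and column (strategy) likelihoods, as assessed in the text.
ROWS = {"Skeptic": 0.50, "Evangelist": 0.35, "Doomer": 0.15}
COLS = {"Left AI": 0.60, "Right AI": 0.25, "No AI": 0.15}


def joint_probabilities(
    rows: dict[str, float], cols: dict[str, float]
) -> dict[tuple[str, str], float]:
    """Multiply every row likelihood by every column likelihood.

    Multiplying the marginals like this treats worldview and strategy
    as independent of each other.
    """
    return {
        (r, c): pr * pc
        for (r, pr), (c, pc) in product(rows.items(), cols.items())
    }


cells = joint_probabilities(ROWS, COLS)

# List the nine cells from most to least likely.
for (row, col), p in sorted(cells.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{row} + {col}: {p:.2%}")

print(f"Total: {sum(cells.values()):.2%}")
```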

Most likely future: collapse while being scolded by Left AI (30%)

The most probable trajectory is that AI is real but not revolutionary—yet becomes hegemonic anyway due to institutional capture.

We do not go extinct. We do not transcend. We simply degrade, humiliated, under the watchful eye of an AI that nags us about privilege.

Best possible future (least improbable positive outcome): Right AI + Evangelist = fighting chance

Even if this only has ~9% probability, it’s the only winning scenario.

All others are variants of collapse, tyranny, or annihilation.

Therefore, the matrix not only diagnoses the field—it justifies the mission.

Lastly, I asked Ptolemy to assess the expected utility of the future, given the three strategies, the three worldviews, and the utility and probability assigned to each.

The expected utility of the future is –17.4 on a scale where +10 is civilizational survival and –50 is extinction.

This is a catastrophically negative projection—not because the worst outcomes are most likely, but because:

Bad outcomes are more likely than good ones

Worst-case outcomes (e.g. Left AI + AGI) are both plausible and catastrophic

Only one cell offers survivable upside: Evangelist + Right AI—and it’s under 9% likely
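How might a figure like –17.4 be computed? Below is a minimal Python sketch that weights each cell’s utility, as listed earlier, by the row and column likelihoods Ptolemy assigned. It is an illustration, not a reconstruction of Ptolemy’s own arithmetic. Two assumptions are worth flagging: the row and column likelihoods are treated as independent, and because the No AI cells are only described as “0 or worse”, the sketch sets them to exactly 0.

```python
# Likelihoods as assessed in the text.
ROWS = {"Skeptic": 0.50, "Evangelist": 0.35, "Doomer": 0.15}
COLS = {"Left AI": 0.60, "Right AI": 0.25, "No AI": 0.15}

# Utility of each cell, keyed by strategy (column) and then worldview (row).
# ASSUMPTION: the text only says No AI is "0 or worse", so 0 is used here,
# which makes the overall total an upper bound given the other numbers.
UTILITY = {
    "Left AI": {"Evangelist": -50, "Skeptic": -10, "Doomer": -50},
    "Right AI": {"Evangelist": 10, "Skeptic": 5, "Doomer": -10},
    "No AI": {"Evangelist": 0, "Skeptic": 0, "Doomer": 0},
}


def strategy_eu(strategy: str) -> float:
    """Expected utility if `strategy` wins out, weighting worldviews by ROWS."""
    return sum(ROWS[view] * u for view, u in UTILITY[strategy].items())


def expected_utility() -> float:
    """Overall expected utility, weighting each strategy by COLS."""
    return sum(COLS[s] * strategy_eu(s) for s in COLS)


for s in COLS:
    print(f"{s}: {strategy_eu(s):+.2f}")
print(f"Overall: {expected_utility():+.1f}")
```

Under those assumptions the total comes out to about -16.9 rather than -17.4; giving the No AI cells any negative values would pull it lower. The per-strategy breakdown (-30 for Left AI, +4.5 for Right AI, 0 for No AI) ranks Right AI above No AI and No AI above Left AI, at least in expectation.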

Ask your AI to contemplate this on the Tree of Woe.